Do you use MCP to standardize connections between your LLMs/Agents and external services?

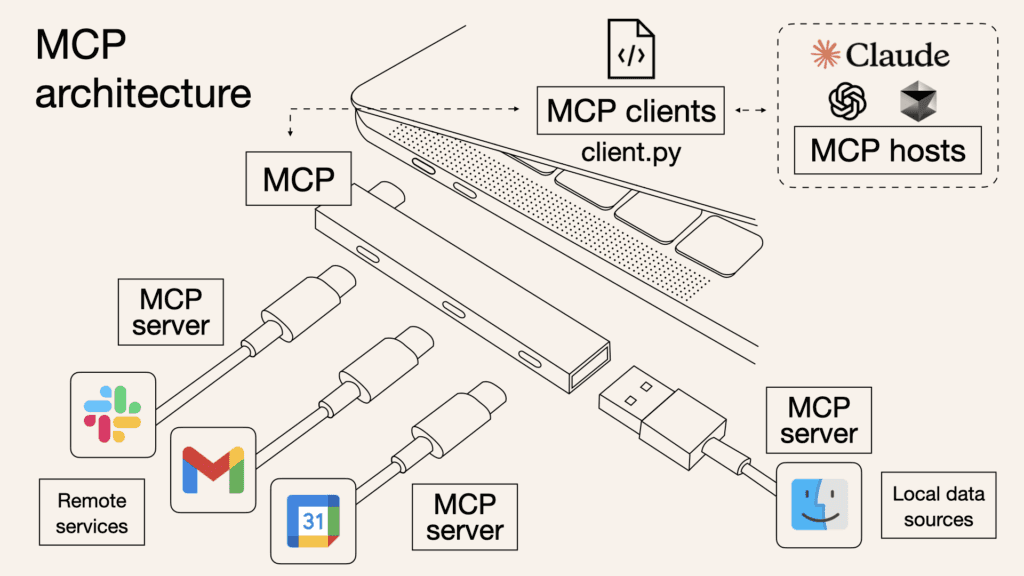

Figure: MCP Architecture (Image Credit: Norah Sakal)

Figure: MCP Architecture (Image Credit: Norah Sakal)

Connecting an LLM-driven agent to multiple external services might look simple in a diagram, but it's often a nightmare in practice. Each service requires a custom integration, from decoding API docs, handling auth, setting permissions, to mapping strange data formats. And when you build it all directly into your agent or app, it becomes a brittle, tangled mess that's impossible to reuse.

What is MCP?

MCP (Model Context Protocol) is a standardized way for LLMs and agents to interact with external APIs and tools—think of it as USB-C for AI. Instead of manually wiring integrations into each agent or app, MCP centralizes them on a server. Agents speak a single protocol, and the server handles:

- Authentication and authorization (OAuth 2.1 supported)

- Data formatting and transformation

- Service-specific quirks and rate limits

This architecture separates logic and plumbing from your agent, making development faster, integrations cleaner, and reusability a breeze.

MCP Architecture Breakdown

Three main components:

1. Agent (Client): The tool that wants to use backend services. E.g. an IDE, app, or LLM.

2. MCP Server: The smart middleman that connects to APIs, handles logic, and enforces security.

3. Backend API: The actual external service like Slack, GitHub, or your internal CRM.

Flow: Agent ⇨ MCP Server ⇨ External API

Why you should use MCP

✅ Write Once, Use Anywhere: Build an integration once in the MCP server and point any MCP-compatible app at it. ✅ Keep agents lightweight: Agent logic stays clean and generic. ✅ Dynamic discovery: Models can see available tools and prompts at runtime, without needing hardcoded instructions. ✅ Improved reliability: Core logic lives in server code, not fragile prompt text. ✅ Chaining tools: Create powerful workflows by chaining steps (e.g. summarize a spreadsheet, post result to Slack) as a single tool.

Your LLM needs to talk to Slack, GitHub, and your internal support ticketing system. Without MCP, you'd build 3 custom plugins. With MCP, you build 3 connectors into the MCP server, and every agent instantly gets access.

Tools, Prompts, Resources – All in One Protocol

The server can expose:

- Tools: Callables like

sendSlackMessage,createGitHubIssue - Prompts: Custom behavior templates, like a "friendly escalation email"

- Resources: Contextual data models, documents, or knowledge graphs

The agent can offer:

- Roots: File access when needed

- Sampling: Let the server trigger generation tasks

All communication is standardized, secure, and discoverable.

Who's already using MCP?

- Anthropic invented it.

- Google, Microsoft, and OpenAI are backing it.

- Zapier wrapped 7,000+ SaaS integrations as an MCP gateway.

- IDEs like VS Code, Cursor, and JetBrains are MCP-native.

- Docker, Postman, GitHub are building servers.

USB-C for AI: Not Just a Buzzword

Like USB-C unified device ports, MCP is set to unify LLM integrations. The protocol evolves openly, adoption is growing, and contributors span the AI ecosystem.

Betting against MCP is like betting against standards that make life easier.

How to get started

Whether you're building the agent side or the server:

- 🛠️ Server Quick Start: Perfect for devs making reusable tools.

- 🧠 Client Quick Start: If you're building apps that want to call those tools.

- 📚 Browse example servers and client list to see the growing ecosystem.

Try it yourself

Want to get hands-on with MCP? Here's how to get started with one of the useful MCPs called Context7:

LLMs rely on outdated or generic information about the libraries you use. Context7 pulls up-to-date, version-specific documentation and code examples directly from the source. While using AI code editors like Cursor or VSCode, include the phrase "use context7" in your prompt, and it'll automatically retrieve relevant information from official sources. This helps AI assistant generate accurate and current code snippets.

- Step 1 - Go to context7 repo and read the setup guide (Cursor, VSCode, Claude Desktop, etc) https://github.com/upstash/context7

- Step 2 - Once installed, try asking a question using context7 Example: In your Chat panel, type: "Add tailwind to my project. use context7"

✨ Note: Make sure you're in Agent Mode

Cursor/VSCode will auto‑discover two tools exposed by Context7: resolve-library-id ➜ get-library-docs. It resolves "tailwind" to the latest compatible ID and injects concise, version‑accurate code snippets into the conversation

- Step 3 - Verify that tailwind (version 4) is added

- Step 4 - Try these steps without using context7 and compare your results.

What you just proved

By using context7, you are referring to the latest docs, and it will reduce bugs and errors in your code. Context7 is just one of many MCP servers you can use to improve your experience with LLMs. There are other great tools such as GitHub MCP, Browser Use MCP (Page navigation, form filling, reading console messages), Sequential Thinking, and Playwright MCP (helps with automate testing and browser interactions). More servers can be found here: https://www.pulsemcp.com/servers

Need help connecting your proprietary services?

📩 Reach out to SSW – we'll help you build a robust, scalable MCP server.